Short Paper as a Proposal to attend the

Workshop on

Shared Environments to Support Face-to-Face Collaboration

at CSCW 2000

Human Computer Interaction Institute

School of Computer Science

Carnegie Mellon University

Pittsburgh, PA 15213

bam@cs.cmu.edu

http://www.cs.cmu.edu/~pebbles

The Pebbles research project is investigating how the functionality and the user interface can be spread across all of the computing and input/output devices that one or more users have available. In this way, hand-held devices can augment other computers rather than merely replacing them when those computers are unavailable. As part of this research, we are looking at how multiple people can use their hand-helds to share control of a main computer running either legacy applications or custom multi-person applications. We are also looking at how information and control can be moved fluidly between the main screen and the individual hand-held screens.

Keywords: Personal Digital Assistants (PDAs), Palm Pilot, Windows CE, Pocket PC, Single Display Groupware, Pebbles, Multi-Computer User Interfaces, Command Post of the Future (CPOF).

The Pebbles research project (http://www.cs.cmu.edu/~pebbles) has been studying the use of mobile hand-held computers at the same time as other computing devices. As people move about, they enter and leave spaces that contain embedded or desktop computers, such as offices, conference rooms, and even "smart homes." We are exploring the many issues surrounding how the user interface, functionality, and information can be spread across multiple devices that are used simultaneously. For example, there are many ways that a personal digital assistant (PDA), such as a Palm Pilot or Pocket PC device, can serve as a useful adjunct to a personal computer and enhance interaction with existing desktop applications. New applications may distribute their user interfaces across multiple devices so the user can choose the appropriate device for each part of the interaction. We call these kinds of applications “multi-computer user interfaces (MCUIs).” Important attributes of MCUIs are that they use heterogeneous devices for both input and output, that many of the devices have their own embedded processors, that the devices are all connected and share information synchronously, and that the devices are all co-located and are used by individuals or groups. A key focus of our research is that the hand-held computers are used both as output devices and as input devices that control the activities on the other computers. The name Pebbles stands for "PDAs for Entry of Both Bytes and Locations from External Sources." This paper gives a brief overview of the Pebbles research as it relates to the workshop themes. For more information on Pebbles, see our other publications [Myers 1998] [Myers 2000a] [Myers 2000b] or the Pebbles web site.

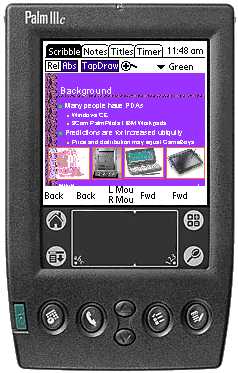

When multiple people in a meeting each have their own hand-held, the hand-helds can be used to control the main display. This is often called Single Display Groupware (SDG), since all users share the same screen and therefore the same widgets. We created a shared drawing program, named PebblesDraw, that allows everyone to draw simultaneously [Myers 1998]. In creating PebblesDraw (shown in Figure 1), we had to solve a number of interesting problems with standard widgets such as selection handles, menubars, and palettes. Palettes, such as those that show the current drawing mode or the current color, normally indicate the current setting by highlighting one of the items in the palette. This no longer works when multiple people have different modes. Furthermore, conventional menubars at the top of the window or screen are difficult to use in multi-user situations because their menus may pop up on top of a different user’s activities. The conventional way to identify different users is to assign each one a different color, but in a drawing program each user may also want to select the color used for drawing, which causes confusion between the color that identifies the user and the color with which the user draws. We therefore developed a new set of interaction techniques to support Single Display Groupware more effectively.

Figure 1. The PebblesDraw shared drawing tool. Each person has their own cursor shape, and the user's modes are shown in that user's cursor, as well as in the home area at the bottom.
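The central idea behind these techniques is that modes belong to each user rather than to the application as a whole, and that a user's identity (here, a cursor shape) is kept separate from that user's drawing color. The following Python sketch illustrates the idea under stated assumptions: the class names, fields, and the default tool and color are hypothetical and are not taken from the PebblesDraw implementation.

```python
from dataclasses import dataclass, field


@dataclass
class UserState:
    """Hypothetical per-user state: each connected PDA user keeps their own modes."""
    name: str
    cursor_shape: str          # identifies the user (instead of a color)
    tool: str = "line"         # this user's drawing mode (assumed default)
    draw_color: str = "black"  # this user's drawing color, independent of identity


@dataclass
class SharedCanvas:
    """Hypothetical single shared canvas driven by several users at once."""
    users: dict = field(default_factory=dict)
    shapes: list = field(default_factory=list)

    def join(self, name, cursor_shape):
        self.users[name] = UserState(name, cursor_shape)

    def set_mode(self, name, tool=None, color=None):
        # A mode change affects only the user who made it, so there is no
        # single globally highlighted palette item.
        user = self.users[name]
        if tool is not None:
            user.tool = tool
        if color is not None:
            user.draw_color = color

    def draw(self, name, start, end):
        # Each input event carries the user's identity; that user's own modes
        # decide what gets created.
        user = self.users[name]
        shape = (user.tool, user.draw_color, start, end, name)
        self.shapes.append(shape)
        return shape

    def home_area(self):
        # One summary entry per user, analogous to the home area in Figure 1.
        return {u.name: (u.cursor_shape, u.tool, u.draw_color)
                for u in self.users.values()}
```

For example, after `set_mode("ann", tool="rect", color="red")`, a stroke from a second user still uses that user's own settings, so one person's palette choice never changes what another person draws.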

In other cases, users might want to collaborate over legacy applications that only support a single cursor. Our "RemoteCommander" application allows each hand-held to act as the mouse and keyboard of the main computer. This raises a number of interesting issues of floor control, the protocol that determines which user has control and how users take turns when multiple people share a limited resource, such as a single cursor, in a synchronous task. We are in the process of studying sharing mechanisms in a highly collaborative, computer-based task in which all the subjects are co-located. The study includes two techniques in which every user has their own cursor, and five floor-control techniques for sharing one cursor. The floor-control techniques are: having a moderator decide whose turn it is, averaging all inputs together, blocking the others' input while the cursor is in use, explicit release, and explicit grab. We found no previous studies comparing all of these mechanisms. Our study uses a jigsaw puzzle game (see Figure 2) in which multiple people use hand-helds to control either their own cursor or the shared cursor. Our preliminary results suggest that giving everyone a separate cursor works best, and that among the floor-control mechanisms, blocking interference with a time-out works best.

Figure 2. Jigsaw puzzle task for the floor control user study.
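To make the five floor-control policies concrete, the following Python sketch models one shared cursor that several hand-helds drive with relative movements. It is an illustration, not the RemoteCommander code: the policy names, the one-second default time-out, and the choice to average the movement deltas received in one time step are all assumptions.

```python
class FloorControl:
    """Hypothetical model of one shared cursor under a floor-control policy."""

    POLICIES = ("moderator", "average", "block", "release", "grab")

    def __init__(self, policy, timeout=1.0, moderator=None):
        if policy not in self.POLICIES:
            raise ValueError(f"unknown policy {policy!r}")
        self.policy = policy
        self.timeout = timeout      # assumed idle time (s) before a blocked floor frees up
        self.moderator = moderator  # user allowed to assign turns ("moderator" policy)
        self.holder = None          # user who currently has the floor
        self.last_input = float("-inf")
        self.x = 0.0
        self.y = 0.0

    def move(self, user, dx, dy, now):
        """Apply one relative movement from `user`; return True if it moved the cursor."""
        if self.policy == "block":
            # First mover takes the floor; others are blocked until the holder
            # has been idle for at least `timeout` seconds.
            busy = self.holder not in (None, user) and now - self.last_input < self.timeout
            if busy:
                return False
            self.holder = user
        elif self.policy == "release":
            # A free floor goes to whoever moves; it stays theirs until release().
            if self.holder is None:
                self.holder = user
            elif self.holder != user:
                return False
        elif self.policy in ("grab", "moderator"):
            # The floor changes hands only through grab() or assign().
            if self.holder != user:
                return False
        else:
            raise ValueError("use move_average() for the 'average' policy")
        self.x += dx
        self.y += dy
        self.last_input = now
        return True

    def move_average(self, deltas):
        """'average' policy: combine every user's (dx, dy) from one time step."""
        if self.policy != "average":
            raise ValueError("move_average() is only for the 'average' policy")
        if deltas:
            self.x += sum(dx for dx, _ in deltas) / len(deltas)
            self.y += sum(dy for _, dy in deltas) / len(deltas)

    def release(self, user):
        """Explicit release: the holder gives up the floor."""
        if self.holder == user:
            self.holder = None

    def grab(self, user):
        """Explicit grab: any user may take the floor at any time."""
        if self.policy != "grab":
            raise ValueError("grab() is only for the 'grab' policy")
        self.holder = user

    def assign(self, requester, user):
        """Moderator policy: only the moderator decides whose turn it is."""
        if self.policy != "moderator" or requester != self.moderator:
            return False
        self.holder = user
        return True
```

Under the `"block"` policy, for instance, a second user's input is ignored while the first user is active and is accepted once the first user has been idle for `timeout` seconds, which corresponds to the time-out blocking mechanism described above.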

Another possibility for collaborating on legacy applications is simply to "scribble" on top of the screen without interfering with the real cursor. Our "Scribble" application for the PDA does this.

We created a chat program, PebblesChat, which allows users to send side messages to each other. A user can switch to PebblesChat on the PDA and send a message either to all the other connected users or to a specific user by name. In certain types of meetings, such as negotiations or legal proceedings, it is very important for people to be able to send private messages to people on their "own side" without the other side knowing what is being said.
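The routing rule described for PebblesChat, sending either to everyone else or to one named user, can be sketched as a small relay on the main computer. The `ChatRelay` class and its per-user inboxes are hypothetical; the paper does not describe how messages are actually transported.

```python
class ChatRelay:
    """Hypothetical message relay for side messages between connected PDAs."""

    def __init__(self):
        self.inboxes = {}  # user name -> list of (sender, text) messages received

    def connect(self, name):
        self.inboxes.setdefault(name, [])

    def send(self, sender, text, to=None):
        """Deliver `text`; to=None means every other connected user."""
        if to is None:
            recipients = [name for name in self.inboxes if name != sender]
        elif to in self.inboxes:
            recipients = [to]  # private: only the named user receives it
        else:
            raise KeyError(f"no connected user named {to!r}")
        for name in recipients:
            self.inboxes[name].append((sender, text))
        return recipients
```

Because a private message is appended only to the named recipient's inbox, no other participant receives it.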

Our "SlideShowCommander" application [Myers 2000b] allows the presenter to control a presentation using the PDA. While the main computer drives the public display and runs PowerPoint, the hand-held displays a thumbnail of the current slide, the notes for the current slide, and the list of slide titles (see Figure 3). The user can easily move forward or backward through the slides or jump to a particular slide. Scribbling on the thumbnail makes the same marks appear on the main screen. The groupware aspect is that multiple people can run SlideShowCommander at the same time. In the future, we plan to investigate how this might be used to enhance note-taking and question-asking by the audience.

Figure 3. SlideShowCommander on the Palm and Windows CE.

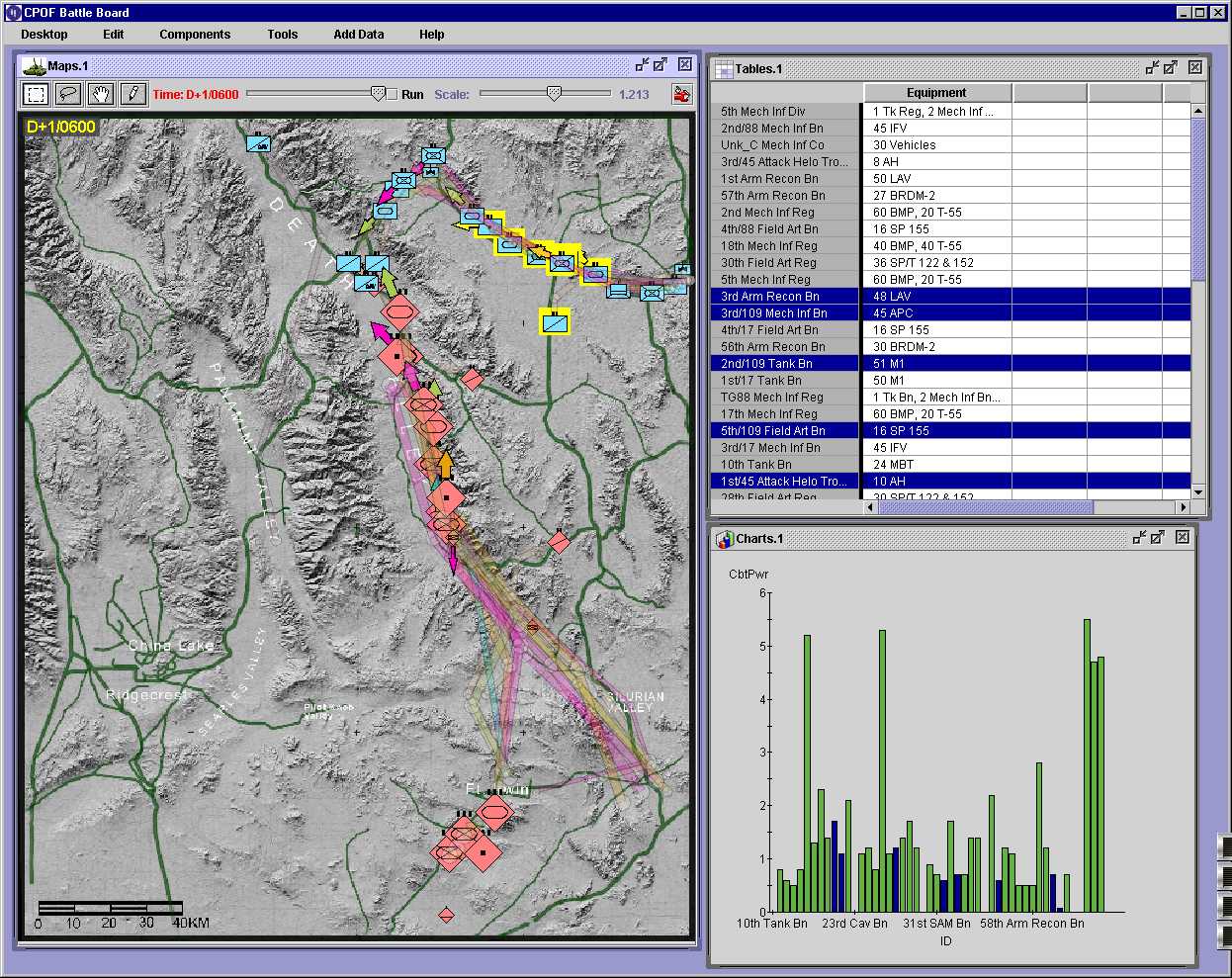

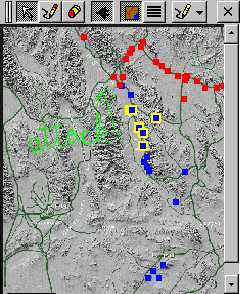

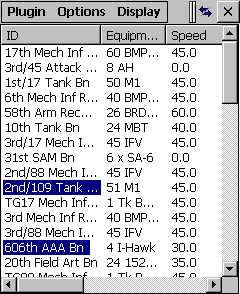

As part of the “Command Post of the Future” project (see http://www.cs.cmu.edu/~cpof), we are investigating the use of hand-helds in a large shared space. In a military command center, several large displays show maps, schedules, and other visualizations of the current situation that are useful to the group. Each individual carries a personal PDA. While in the command center, someone might want more details about an item shown on a large display. Rather than disrupting the main activities and the main display, the user can pull out the PDA, and a special unobtrusive cursor appears on the main display so the user can point to the item of interest. The user can then privately “drill down” to get additional specialized information displayed on the PDA, with the presentation adjusted to fit the limited size of the PDA screen.

In cooperation with the MayaViz company, we have created a PDA-based visualization and control program that runs on Windows CE and Palm. The PDA shows a map view, on which the user can scribble and select objects, and a table view of the detailed information. The user can work either connected, so that operations on the PDA are immediately reflected on the main screen, or disconnected. Figure 4 shows some example screens.

Figure 4. On the top is what appears on the main PC screen, and on the bottom is what is shown on the hand-helds.
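One way to structure the connected and disconnected modes is sketched below in Python. The paper states only that connected operations are reflected immediately on the main screen; the assumption that disconnected operations are queued and replayed on reconnection, along with all class and method names, is an illustrative choice rather than a description of the MayaViz program.

```python
class MainScreen:
    """Stand-in for the shared display on the main PC (hypothetical)."""

    def __init__(self):
        self.applied = []

    def apply(self, op):
        self.applied.append(op)


class PdaSession:
    """Hypothetical PDA client that can work connected or disconnected."""

    def __init__(self, screen):
        self.screen = screen
        self.connected = False
        self.local_ops = []  # every operation, shown on the PDA's own view
        self.pending = []    # operations made while disconnected (assumed to be replayed)

    def perform(self, op):
        self.local_ops.append(op)  # the PDA view always updates immediately
        if self.connected:
            self.screen.apply(op)  # connected: reflected on the main screen at once
        else:
            self.pending.append(op)

    def set_connected(self, connected):
        self.connected = connected
        if connected:
            # Assumption: on reconnecting, replay queued operations in order.
            for op in self.pending:
                self.screen.apply(op)
            self.pending.clear()
```

Replaying in order keeps the main screen consistent with the sequence of selections and scribbles the user made on the PDA; a real system would also need a policy for conflicts with changes made on the main screen in the meantime.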

We believe in distributing the results of our research to collect useful feedback and aid technology transfer. Our software has been available for about two years and has been downloaded about 25,000 times (see http://www.cs.cmu.edu/~pebbles). The SlideShowCommander program has been licensed for commercial sale (see http://www.slideshowcommander.com).

We are currently investigating many different issues of multi-computer user interfaces. We would like to generalize from the specific applications to develop lessons and rules of thumb that can guide future user interface designs. With emerging wireless technologies, connecting PCs and PDAs will no longer be an occasional event for synchronization. Instead, the devices will frequently be in close, interactive communication. We are pursuing the research needed to guide the design of interfaces that run in this environment and span multiple computers, both for individuals and for groups.

Many other people have also worked on the Pebbles research project. See our web site for a complete list.

This research is supported by grants from DARPA and Microsoft, and equipment grants from Palm, Hewlett-Packard, Lucent, Symbol Technologies, and IBM. This research was performed in part in connection with contract number DAAD17-99-C-0061 with the U.S. Army Research Laboratory. The views and conclusions contained in this document are those of the authors and should not be interpreted as presenting the official policies or position, either expressed or implied, of the U.S. Army Research Laboratory or the U.S. Government unless so designated by other authorized documents. Citation of manufacturer’s or trade names does not constitute an official endorsement or approval of the use thereof.

Brad A. Myers is a Senior Research Scientist in the Human-Computer Interaction Institute in the School of Computer Science at Carnegie Mellon University, where he is the principal investigator for various projects, including User Interface Software, Demonstrational Interfaces, Natural Programming, and the Pebbles PalmPilot Project. He is the author or editor of over 200 publications, including the books "Creating User Interfaces by Demonstration" and "Languages for Developing User Interfaces," and he is on the editorial board of five journals. He has been a consultant on user interface design and implementation to 40 companies. Myers received a PhD in computer science from the University of Toronto, where he developed the Peridot UIMS. He received the MS and BSc degrees from the Massachusetts Institute of Technology, during which time he was a research intern at Xerox PARC. From 1980 until 1983, he worked at PERQ Systems Corporation. His research interests include User Interface Development Systems, user interfaces, Programming by Example, programming languages for kids, Visual Programming, interaction techniques, window management, and programming environments. He belongs to SIGCHI, ACM, IEEE Computer Society, IEEE, and Computer Professionals for Social Responsibility.